RLHF & Human Values Project

Introduction

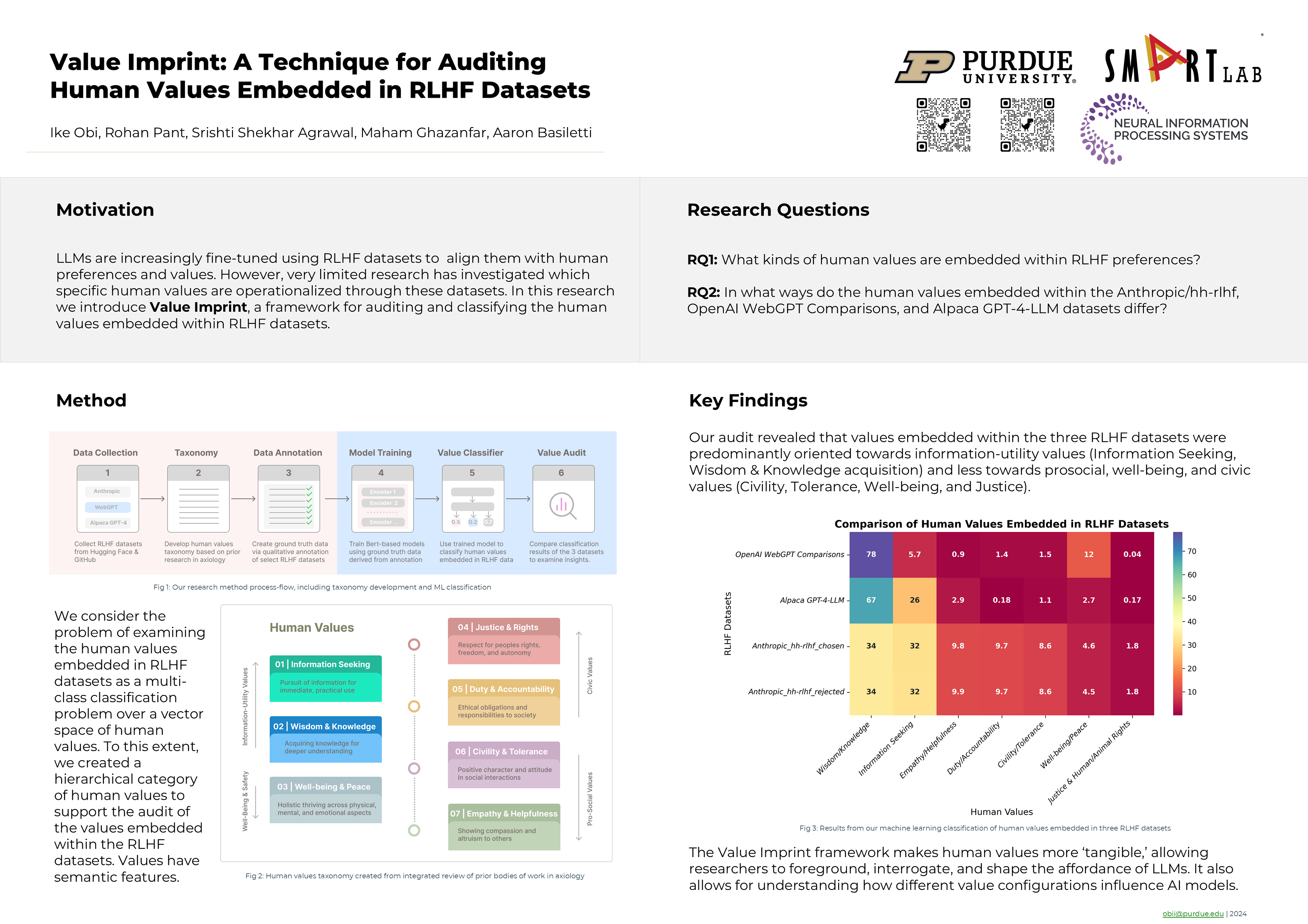

LLMs are increasingly fine-tuned using RLHF datasets to align them with human preferences and values. However, very limited research has investigated which specific human values are operationalized through these datasets. In this paper, we introduce Value Imprint, a framework for auditing and classifying the human values embedded within RLHF datasets. To investigate the viability of this framework, we conducted three case study experiments by auditing the Anthropic/hh-rlhf, OpenAI WebGPT Comparisons, and Alpaca GPT-4-LLM datasets to examine the human values embedded within them. Through this approach, we discovered that information-utility values, including Wisdom/Knowledge and Information Seeking, were the most dominant human values within all three RLHF datasets. In contrast, prosocial and democratic values, including Well-being, Justice, and Human/Animal Rights, were the least represented human values. These findings have significant implications for developing language models that align with societal values and norms. We contribute the following to support further research in this area :

- We introduce a technique for auditing and classifying the underlying human values embedded within RLHF preferences, providing AI researchers with a technique for auditing and interrogating the quality of RLHF datasets.

- We conduct three case study experiments using this approach and through our findings reveal that Wisdom/Knowledge and Information Seeking were the most dominant human values within the datasets; validating our technique.

- We contribute both our ground truth annotation and classification datasets and, through this means, provide researchers with the pathway to take this work forward.

Methodology

The dataset we used for this study was colleced from Huggingface, an open-source platform that provides tools and resources for AI practitioners to help them build, deploy, and train machine learning models. Collecting the dataset from Huggingface allowed us to review the community engagement around each RLHF dataset, including the number of downloads, likes, and the number of AI models trained with the dataset. Using this approach, we collected RLHF datasets that represented high interest within the Huggingface community.

Following the data collection, we transitioned to content analysis of the dataset in preparation for curating high-quality samples that we will use to train a model for classifying the entire dataset. For classifying the values, we first developed a dictionary of human values and then categorized them to allow for easier abstraction. We used the hypernyms to hyponyms framework to ensure that the human value categorization is semantically coherent and condensed the human values to their hypernym categories, which we then used for annotating the human values within the dataset.